Baylor University

Department of Electrical & Computer Engineering

Secured and Dependable Intelligent Systems (SeDIS) Lab

Research Mission

The objective of the Secured and Dependable Intelligent Systems (SeDIS) Lab is to pursue cutting-edge research by proposing theories, methods, tools, and protocols to guarantee and enhance safety, quality of service, and security in intelligent and complex cyber-physical systems (CPS) and edge computing paradigms by exploring formal methods, machine learning techniques, blockchain, and cybersecurity principles. The application domains of our research are Robotic applications, Autonomous systems, edge computing paradigms, and safety-critical health applications.

Research Projects

Here are some projects that we are currently working on:

Formal methods for robotics and CPS

Formal methods employs mathematical techniques to design, specify, and verify software and hardware systems. With formal methods, we model complex cyber-physical systems as mathematical entities, to verify and monitor system properties and correct behavior. Compared to other techniques such as testing, simulations, etc., which usually sample subset of possible inputs, formal verification can mathematically prove system's correctness under all possible scenarios leading to the identiication of all known and hidden bugs. In this regard, we can guarantee and improve system's availability, reliability and robustness by proving that the system will not fail with a run-time error.

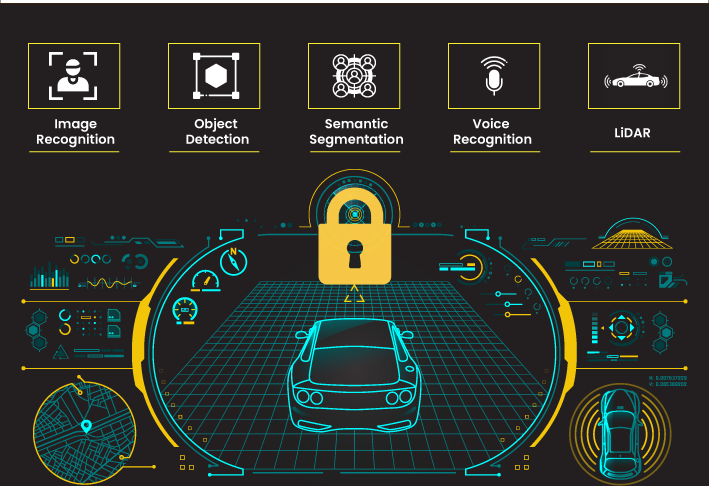

In this project, we investigate and propose formal verification techniques, such as model checking, runtime monitoriing, etc., to be analyze and monitor mission specifications and planning at runtime. We combine these techinques with other methods such as Satisfiability Modulo Theories (SMT) and Advanced Machine Learning algorithms to explore security- safety- and privacy-aware motion planning in critical autonomous systems.

Privacy Preservation of Internet of Things (IoT)/Internet of Autonomous Systems (IoAT)

As IoAT devices continually proliferate in homes, businesses, and critical infrastructure, the desire to ensure safety, privacy, and reliable operation has continually become paramount. Consistently, many IoAT devices collect and transmit sensitive personal or organizational data, such as health metrics (from smart wearables), home activity patterns, or financial data (from connected payment systems). Autonomous systems, such as drones, self-driving cars, or industrial robots, rely on these real-time data for decision-making. If compromised, malicious users could access critical operational or personal information leading to potential damage to the user.

In this project, we leverage blockchain technology, encryption techniques, machine learning algorithms and digital twins technology to design and propose protocols for user authentication and privacy preservation. With the proposed algorithms, we aim to mitigate various systems risks and attacks across all levels of abstractions of any given IoAT application. We apply the techniques proposed in saftey-critical applications such as healthcare applications, insurance infrastrucures, financial systems, etc.

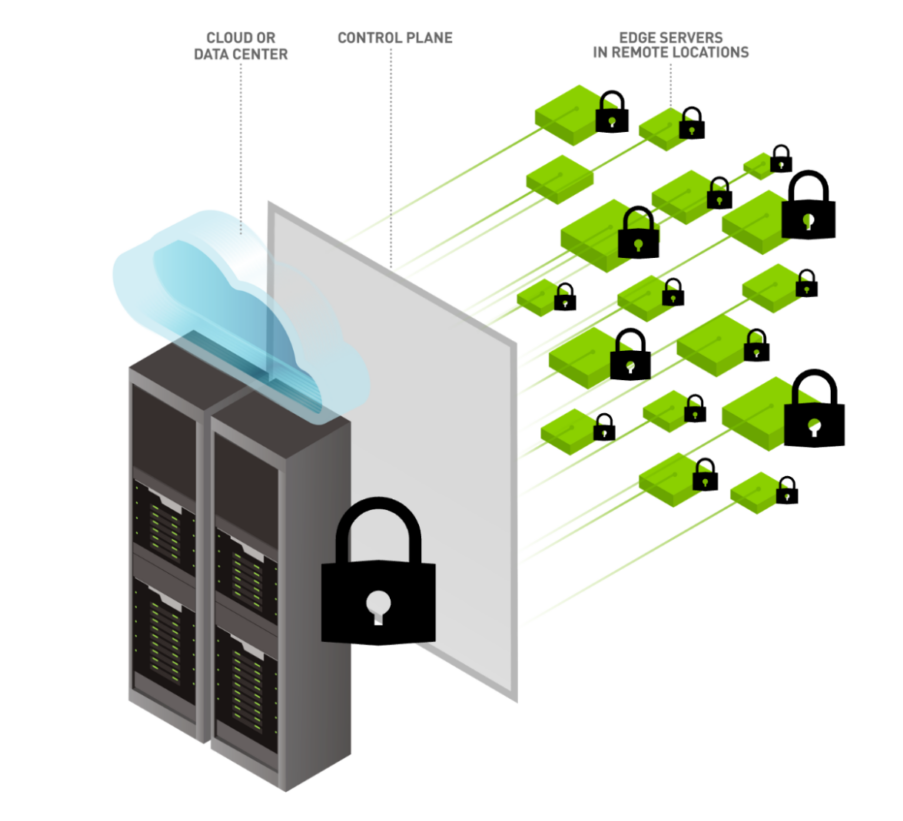

Cybersecurity of Edge Computing Paradigms

While edge computing reduces latency, improves performance, and allows for real-time processing, it introduces unique cybersecurity challenges and vulnerabilities. The decentralized nature of edge computing creates a larger attack surface and requires robust security measures. Edge computing involves a wide network of heterogeneous edge devices and platfrom with each device introducing a potential entry point for attackers. With exchange of sensitive data across various edge computing platforms, there is the need to design systems that addresses data privacy and any communication vulnerabilities.

In this project, we leverage data encrytption techniques, intrusion detection and prevention systems and blockchain and machine learning algorithms to design and propose authentication and enhanced intrusion detection and encryption techniques to guarantee resilience and reliabilty of edge paradigms while considering the limited computation resources of IoT devices.

Explainable AI for Robotics

Autonomous Systems are susceptible to failure in the discharge of their duties. To ensure safety, trust, reliability, and transparency iin these AI-driven systems, we Explainable AI (XAI) techniques. XAI is a set of methods and techniques that make the decision-making processes of AI models transparent and interpretable to humans. In the context of autonomous systems, XAI plays a critical role in identifying, diagnosing, and preventing system failures by providing insights into why and how decisions are made by AI-driven systems.

In this project, we focus on post-failure diagnotics and real-time interpretability in autonomous systems. After a failure, XAI techniques can identify which inputs, model decisions, or environmental conditions contributed to the failure. Likewise, XAI can provide real-time explanations for the decisions an autonomous system is making, allowing operators to intervene if the system is heading towards a potential failure. We specify the tasks to be performed using temporal logic and use machine learning-based explainaility techniques such asn LIME, SHAP, Attention mechanisms, etc. to generate interpretable approximations of complex systems which align with knwon ethical AI frameworks.